The article below originally appeared on IRI's blog and is being re-published here to show Townsend Security's blog readers how Alliance Key Manager integrates with IRI FieldShield.

In a previous article, we detailed a method for securing the encryption keys (passphrases) used in IRI FieldShield data masking jobs through the Azure Key Vault. There is now another, even more robust option for encryption key management available, thanks to API-level integration between FieldShield and the Alliance Key Manager (AKM) platform from Townsend Security.

AKM provides authenticated access to FieldShield passphrases from any of five server deployment options (listed below). Authentication ensures that only authorized users can access the AKM key server and obtain the keys to decrypt FieldShield-encrypted field data (column values).

But beyond authentication, AKM provides a complete encryption key management solution which includes: key server setup and configuration, key lifecycle administration, secure key storage, key import/export, key access control, server mirroring, and backup/restore. AKM also supports compliance audit logging of all server, key access and configuration functions.

How AKM Works with FieldShield

AKM is used directly in FieldShield data masking jobs through field syntax of the form “AKM:KeyName”. Here “AKM:” invokes the Alliance Key Manager, and “KeyName” is a placeholder for the name of a key created in AKM (it can be any name you choose). FieldShield retrieves the value associated with that key name.

In a FieldShield decryption job, key retrieval from AKM is performed via a secure TLS connection to the AKM server. Both the client (FieldShield user) and server (AKM) end-points are authenticated via TLS.
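To illustrate what authenticating both end-points over TLS involves on the client side, here is a minimal Python sketch. It is not the AKM SDK (FieldShield uses Townsend Security's C libraries for this); the function name and the file paths in the usage comment are hypothetical, and the sketch only shows the general pattern of verifying the server while presenting a client certificate.

```python
import ssl
from typing import Optional


def build_mutual_tls_context(ca_file: Optional[str] = None,
                             client_cert: Optional[str] = None,
                             client_key: Optional[str] = None) -> ssl.SSLContext:
    """Return a client-side TLS context that requires a verified server certificate.

    ca_file:     CA certificate used to verify the server (system defaults if None).
    client_cert: certificate the client presents so the server can authenticate it.
    client_key:  private key matching client_cert.
    """
    # SERVER_AUTH means "this context is used by a client to authenticate a server";
    # it enables certificate verification and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    if client_cert:
        # Presenting a client certificate is what makes the authentication mutual.
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return context


# Hypothetical usage (paths are placeholders, not AKM defaults):
# ctx = build_mutual_tls_context("ca.pem", "client-cert.pem", "client-key.pem")
```

The server rejects the handshake if the client certificate is missing or not signed by a CA it trusts, which is the behavior the paragraph above describes.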

AKM can be deployed in: 1) VMware; 2) a cloud server in Microsoft Azure; 3) Amazon Web Services; 4) a privately managed Hardware Security Module (HSM); or, 5) a dedicated cloud HSM.

Setting Up

Prerequisites for using AKM to manage encryption key passphrases in FieldShield are:

- A compatible Linux OS (a Windows version is planned)

- A licensed IRI FieldShield installation for Linux under /usr/local/cosort

- An AKM instance with connectivity to the Linux OS

- A .conf file configured with the proper details to connect to AKM from the Linux OS

- The Alliance Key Manager Linux SDK

To run FieldShield, obtain and install license keys from IRI. To run AKM, obtain a license from Townsend Security.

You will need to create a configuration (.conf) file to provide the connection information for AKM. The file includes the locations of certificates, logging options, and AKM connection properties.

The configuration file must be specified correctly, placed in the /usr/local/cosort/etc directory, and called keyclient.conf in order for key retrieval to succeed. Once that’s done, AKM will be accessible and work properly from any of the 5 deployment methods listed above.

You will also need to download the AKM Linux SDK. It contains the packages used to install the Linux libraries for AKM key retrieval used in FieldShield, and a sample keyclient.conf file (shown later).

The AKM Linux SDK

FieldShield makes use of shared libraries provided by Townsend Security to integrate with AKM. More specifically, FieldShield uses the Linux C SDK, which provides tools for integrating C applications with AKM in Linux.

The packages directory under the Linux directory of the Linux SDK contains Debian (or RPM, depending on your Linux distribution) packages that must be installed on your system for the FieldShield-AKM integration to work. Confirm that the shared object library (.so file) is in the /usr/lib directory, or put it there.

The AKM Linux SDK contains packages for the following Linux platforms:

- RHEL/CentOS 4, 5, 6, 7

- SLE 11 SP2, SP3, SP4

- Ubuntu 12.04, 14.04, 16.04

The Ubuntu 16.04 package in the AKM Linux SDK was tested and confirmed to work on Ubuntu 18.04.

Configuring AKM for FieldShield Use

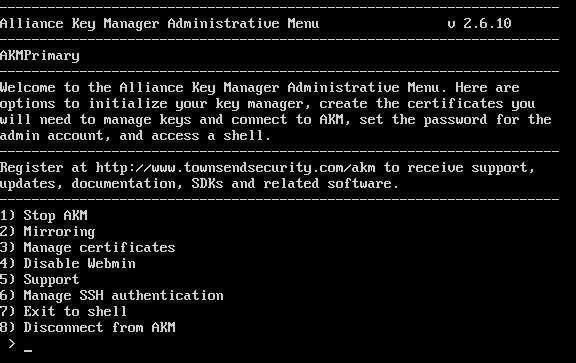

AKM can be deployed in a variety of ways, including through cloud computing providers and local virtual machines. To set up AKM initially, follow the instructions in the documentation and log in to the administrative menu to initialize AKM and create and manage certificates for user authentication.

The AKM instance has a key server at port 6001, a port for key retrieval at port 6000, and a web interface at port 3886. This information must be put into the .conf file so that FieldShield can find the AKM and retrieve the key at decryption time.
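Before writing these values into the .conf file, it can help to confirm that the ports are reachable from the Linux machine running FieldShield. The following Python sketch is one way to do that. The port numbers come from the paragraph above; the function names are hypothetical, and a successful TCP connection only shows that the port is open, not that authentication will succeed.

```python
import socket

# Port numbers as listed above; the host is the IP address of your AKM instance.
AKM_PORTS = {"key retrieval": 6000, "key server": 6001, "web interface": 3886}


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


def check_akm_ports(host: str) -> dict:
    """Map each AKM service name to whether its port accepted a connection."""
    return {name: port_is_open(host, port) for name, port in AKM_PORTS.items()}


# Example (replace with your AKM address):
# print(check_akm_ports("192.168.56.20"))
```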

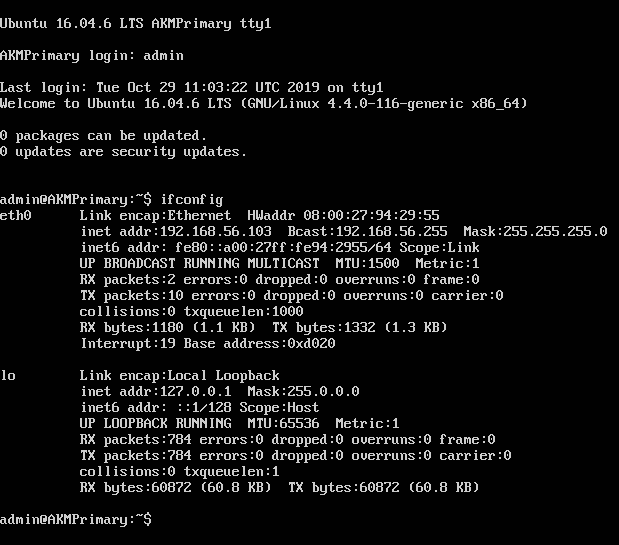

After logging in to AKM, the IP address of the AKM instance can be found by typing ifconfig:

Again, the default port is 6000 for AKM key retrieval. This should be written in the .conf file like this:

    [ip]
    KeyStoreIpPort=IP:Port

Where IP is the IP address of the AKM, and Port is the port number used for key retrieval. For example:

    [ip]
    KeyStoreIpPort=192.168.56.20:6000
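As a quick sanity check on this setting, the Python sketch below reads the `[ip]` section and splits the `KeyStoreIpPort` value into host and port. It assumes the file is INI-style enough for Python's `configparser` to read, which is an assumption about the file format rather than something the AKM documentation states; the function name is hypothetical.

```python
import configparser


def read_key_store_endpoint(conf_text: str) -> tuple:
    """Return (host, port) from the [ip] KeyStoreIpPort entry of a .conf file's text."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    value = parser["ip"]["KeyStoreIpPort"]
    # Split on the last colon so the port is always the final component.
    host, _, port = value.rpartition(":")
    if not host or not port.isdigit():
        raise ValueError(f"expected IP:Port, got {value!r}")
    return host, int(port)


sample = """
; Configuration file for Universal Key Retrieval API
[ip]
KeyStoreIpPort=192.168.56.20:6000
"""
print(read_key_store_endpoint(sample))  # ('192.168.56.20', 6000)
```

A malformed value such as a missing port raises `ValueError`, which is easier to diagnose than a connection failure at job runtime.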

A complete .conf file could look something like this:

    ; Configuration file for Universal Key Retrieval API

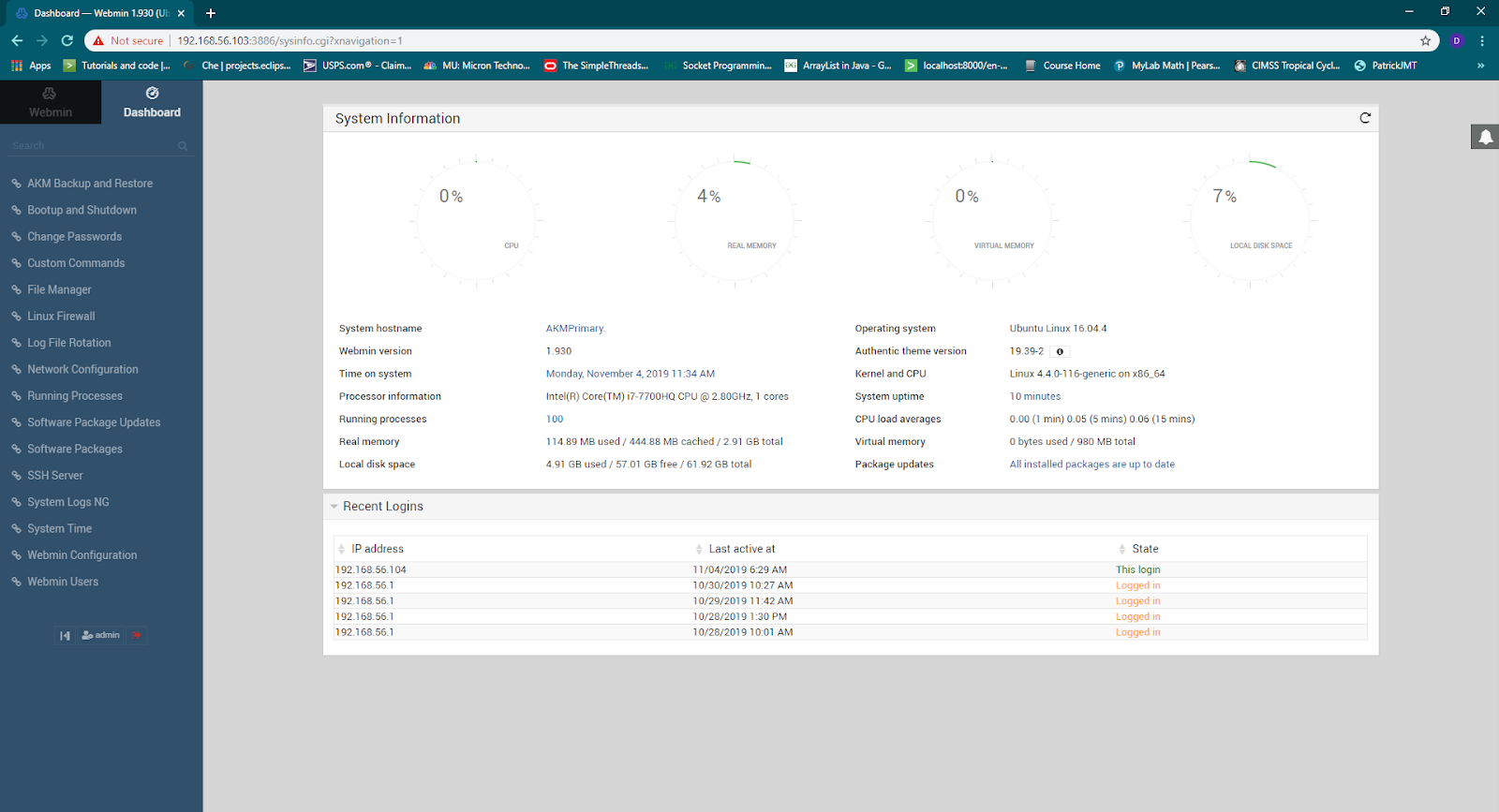

AKM Web Interface (webmin)

The AKM Server web interface (or webmin) monitors AKM performance and login or access attempts, and allows access to the AKM file browser. Many settings can be modified through a secure web interface:

Dashboard menu in the AKM ‘webmin’ web interface


From the file manager in the web interface, full file system access to AKM is available. In the /home/admin/downloads directory, all certificates and private keys should be available in zipped folders.

The certificates and private key should be in the .pem format and stored in the pem folder within the zip folder with the name of the user (rather than the admin1 or admin2 folders). The date value is the day of the month that the folder was created during initialization of the AKM server.

From within webmin you can also access AKM logs, set logging options and IP access control for the web interface, start or stop AKM, enable two-factor authentication for the web interface, check running processes in AKM, and more.

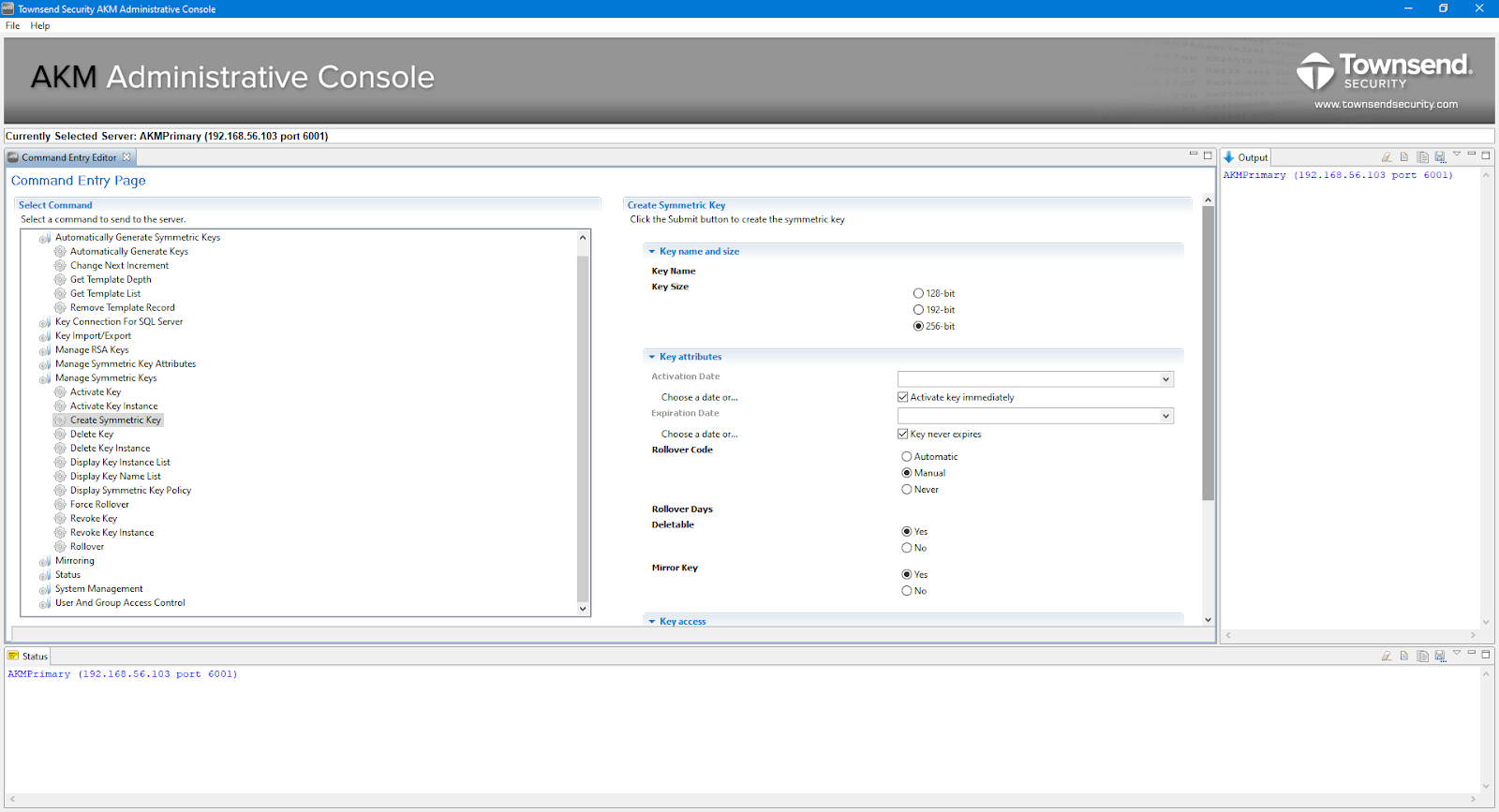

Creating and Using FieldShield Keys

AKM provides options for creating, securing, and managing encryption keys through the AKM Administrative (Admin) Console app for Windows. Consult the AKM Crypto Officer documentation for current information on creating keys through the AKM Admin console app.

FieldShield only supports 256-bit symmetric keys from AKM, known as AES256 keys. This provides the best combination of security and performance.

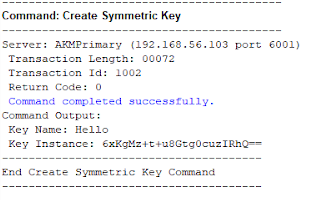

Otherwise, select the rest of the options as desired and click the submit button to generate an encryption key. The output should be similar to this:

Alternatively, when initializing AKM, a set of encryption keys can be automatically generated. A prompt appears at AKM initialization asking if an initial set of encryption keys should be generated or not.

The encryption keys you create in AKM at initialization, or through the AKM Admin Console application, will serve as passphrase values in FieldShield target /FIELD specifications that encrypt or decrypt values at the field or column level. For example, this statement:

    /FIELD=(Encrypted_CCN=enc_aes256_fp_alphanum(CCN, AKM:AES256), TYPE=NUMERIC, POSITION=12, SEPARATOR="|", ODEF=CCAcctNum)

will encrypt the CCAcctNum in the 12th column of the source database table with 256-bit AES alphanumeric format-preserving encryption using the key created inside AKM under the name AES256.

What’s actually happening? FieldShield retrieves from AKM a base64-encoded stream of characters (a key value) that is associated with that AKM key name. FieldShield then uses that stream as a new passphrase value.

It’s that new passphrase value that FieldShield then uses (just as it did before AKM) to derive the actual encrypt/decrypt key used at FieldShield runtime. In other words, using AKM involves a double derivation.
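The two steps can be sketched in Python as follows. This is only an illustration of the idea: the article does not document how FieldShield derives its runtime key from a passphrase, so PBKDF2-HMAC-SHA256, the salt, and the iteration count below are stand-in assumptions, and the function name is hypothetical.

```python
import base64
import hashlib


def derive_field_key(akm_key_bytes: bytes, salt: bytes,
                     iterations: int = 100_000) -> bytes:
    """Illustrate the double derivation: AKM key value -> passphrase -> working key."""
    # Step 1: the key value retrieved from AKM, base64-encoded, becomes the passphrase.
    passphrase = base64.b64encode(akm_key_bytes)
    # Step 2: the passphrase is run through a key derivation function to produce
    # the 256-bit key actually used for encryption. PBKDF2 is an ASSUMED stand-in
    # here, not FieldShield's documented algorithm.
    return hashlib.pbkdf2_hmac("sha256", passphrase, salt, iterations, dklen=32)


# Example with a dummy 32-byte key value in place of one retrieved from AKM.
demo_key = derive_field_key(bytes(range(32)), salt=b"demo-salt")
print(len(demo_key))  # 32
```

The practical consequence is that the same AKM key name must be available at decryption time, since the working key is reproducible only from the same key value.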

If you want to use a different AKM key name in another /FIELD statement to differentiate your encrypt/decrypt keys, use the AKM Admin Console to create another key under a different name. Reflect that new name into your FieldShield job script in the appropriate /FIELD statement.

To decrypt in this case, a corresponding decryption statement in a subsequent FieldShield job script would need to specify the dec_aes256_fp_alphanum function with the same passphrase (that is, the same AKM key name) to restore the original CCAcctNum value. This method will work with any FieldShield-included encryption or decryption algorithm.

Example Operation

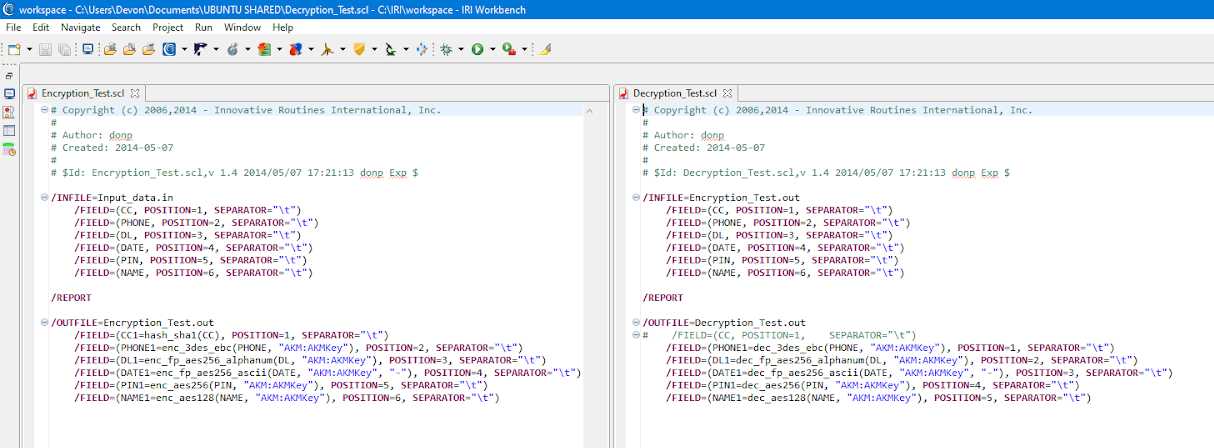

Here is a look at the FieldShield encrypt (left) and decrypt (right) job scripts used:

Note the syntax for specifying AKM use, which is “AKM:KeyName”. Make sure that the key name is properly spelled. Key names that do not exist on the connected AKM instance will result in a Tcpconnect error.
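Because a misspelled key name only surfaces as a connection error at runtime, it can be useful to list the AKM key names a job script references and compare them against the names in the AKM Admin Console. The Python sketch below does the listing. The regular expression assumes key names consist of letters, digits, underscores, and hyphens, which is an assumption rather than a documented AKM rule, and the function name is hypothetical.

```python
import re

# Matches the "AKM:KeyName" syntax; the allowed name characters are an assumption.
AKM_REF = re.compile(r"AKM:([A-Za-z0-9_\-]+)")


def akm_key_names(script_text: str) -> list:
    """Return the distinct AKM key names referenced in a job script, in order of first use."""
    seen = []
    for name in AKM_REF.findall(script_text):
        if name not in seen:
            seen.append(name)
    return seen


script = ('/FIELD=(Encrypted_CCN=enc_aes256_fp_alphanum(CCN, AKM:AES256), '
          'TYPE=NUMERIC, POSITION=12, SEPARATOR="|", ODEF=CCAcctNum)')
print(akm_key_names(script))  # ['AES256']
```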

AKM will attempt to retrieve the key 5 times, each with a timeout of 5 seconds, as specified in the default .conf file. If the key still cannot be retrieved after the final attempt, the job will not run.
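The retry behavior described above follows a common pattern, sketched here in Python. The defaults of 5 attempts and a 5-second timeout mirror the values mentioned in the text; the `fetch` callable, the function name, and the choice of exceptions are hypothetical and stand in for whatever the key client does internally.

```python
import time
from typing import Callable


def retrieve_with_retries(fetch: Callable[[float], bytes],
                          attempts: int = 5,
                          timeout: float = 5.0,
                          pause: float = 0.0) -> bytes:
    """Call fetch(timeout) up to `attempts` times and return the first result.

    Raises RuntimeError if every attempt fails, mirroring the job not running.
    """
    last_error = None
    for _ in range(attempts):
        try:
            return fetch(timeout)
        except (TimeoutError, ConnectionError) as exc:
            last_error = exc
            if pause:
                time.sleep(pause)  # optional wait between attempts
    raise RuntimeError(f"key retrieval failed after {attempts} attempts") from last_error
```

With these defaults, a job can wait up to roughly 25 seconds before failing when the AKM server is unreachable.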

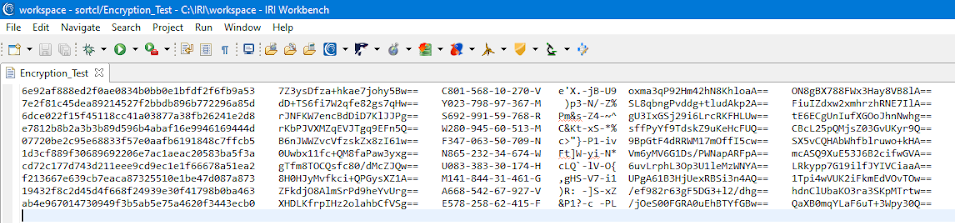

Here is an image of data from this example that FieldShield encrypted using AKM:

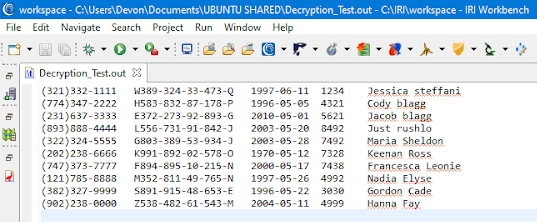

Here is an image of the data after running FieldShield and the key in AKM to decrypt it:

The bottom line: Using AKM to store the passphrases used for decrypting data in FieldShield dramatically enhances encryption key security and industry compliance levels for data masking operations. Through key authentication and secure key management facilities, AKM can help FieldShield users close off more potential gaps in enterprise data security.
